The lazy algorithm vs BIT

Back in my days of competitive coding - I realized that there are two kinds of competitive coders - one who know binary indexed trees (BIT) vs the ones who do not. I have to admit that BITs came very late into my arsenal - and even after that I did not have a sharp enough memory to reproduce BIT's code in a closed book programming contest.

Inadvertently, I had been using an alternative algorithmic approach which can only be named Laziness. Today, I would like to share it as well as subject it to some stress. Also, it's a good moment to refresh a key tenet of coding contests.

Find the simplest (& worst-performing) algorithm which solves the problem in the permitted time limit.

Binary Indexed Tree (BIT)

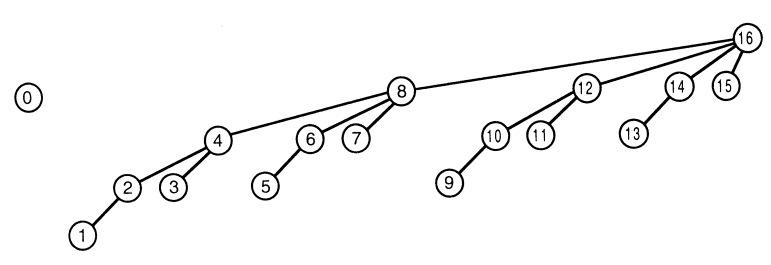

BIT is an insanely simple to code & equivalently complex to understand data structure. It generally comes at midpoint in a competitive coder's life & never comes back when a coder becomes a real life software engineer. A typical issue with BITs is that books & tutorials explain it as It is what it is & it works. Followed by mathematical proofs. I am yet to find a tutorial which explains BITs with a learning by discovery approach.

This article is not about details of BIT. We will start with the premise that the following problem is a BIT problem: range sum query - leetcode.

Given an integer array nums, handle multiple queries of the following types:

-> Update the value of an element in nums.

-> Calculate the sum of the elements of nums between indices left and right inclusive.

> Total Queries = Size of Arr ~ 3 x 10^4

> Permissible Time Limit: O(N * √N) Known acceptable solutions to the above problem:

- BITs: O(log N) updates & query.

- Segment Tree: O(log N) updates & query - but more complex to code.

- Sqrt Decomposition: O(√N) updates & query. This involves breaking down the array into segments of √N. Every segment always keeps an up-to-date sum of all its elements.

The sqrt solution qualifies because it's really hard to create contest problems which can accept N log(N) but not N √N solutions.

The Lazy Algorithm

We considered the three ways to solve the range sum query problem - which run within the given time limit. Let's circle back to the trivial case when there are no updates. In this case, the optimal way is to compute prefix sums. ie. compute another array pre containing cumulative sums of elements of original array, as shown below:

# THE PREFIX SUM METHOD

given arr = [a0, a1, ...]

# Compute an array of prefix sums.

# ith element stores the sum of first i + 1 elements

pre[i] = sum(arr[0:i]) = pre[i-1] + arr[i]

# Answer range query:

rangeSum(L, R) = pre[R] - pre[L-1]

## Deal with corner case of index = 0 separatelyThe prefix sum method + range sum problem have the following properties:

- Computing the pre[] array takes O(N) time.

- After pre computation, every rangeSum query takes O(1) time

- The problem can have O(N) updates. This would make our algorithm O(N*N).

So, what do we do? I will drop a hint: Amortization. A competitive coder should pause here & make an attempt to figure out the lazy-algorithm. Welcome laziness

Let us say we are lazy in propagating the updates to the pre[] array of prefix sums. Instead of propagating the updates; we just keep them in a pending list for a while.

Can u figure out a way to answer the rangeSum(1, 2) by using the stale-prefix array + looking into the pending list?

## The array & computed prefix sums array

arr = [1, 2, 3, 4, 5]

pre = [1, 3, 6, 10, 15]

## New updates requested - Lazy algorithm keeps them in pending list:

update(index = 0, value = 3)

update(index = 1, value = 9)

update(index = 3, value = 10)

arr would have become: [3, 9, 3, 10, 5]

## Range sum query comes up:

rangeSum(1, 2)

We shall use the precomputed array to get a stale answer & go through all elements of the pending list & apply the ones which affect the queried range:

- Stale rangeSum(1, 2) =

pre[2] - pre[0] = 6 - 1 = 5 - The list has an update for index = 1 which applies to the range(1, 2). The value of arr[1] used in stale sum = 2. Updated value = 9.

- Up to date rangeSum(1, 2) =

5 - 2 + 9 = 12.

Now, the only thing left to figure out is exactly how lazy can we be? Let the max size of pending list be L.

- Cost of being lazy = O(L) per range query.

- Cost of not-being-lazy = O(N) computation time. Happens once per L updates.

One can work out the math to see that the optimal size of L = √N. which will get the amortized cost of solving the range sum queries problem in O(N * √N).

Extending Laziness

What have we really achieved? A simple to code & understand lazy solution which can solve a few problems otherwise solvable by BITs, Segment trees or SQRT decomposition?

In order to truly appreciate laziness; we need to go further than the list of problems which have been already posed in programming contests. Here is one such problem which I have never seen in contests & it kicks asses of BITs & Segment Trees.

N queries need to be answered in order. The queries are of the following types:

-> newScore(score): New score is published.

-> rmScore(score): An old score is removed.

-> getRank(score): Get rank of a given score.

> N ~ 3 x 10^4.

> score: Integer. There shall not be duplicate published scores.

> Permissible Time Limit: O(N * √(N * log N)) Can u augment the laziness approach to solve it? Can u solve it a new creative way which beats the lazy approach? Please let me know.